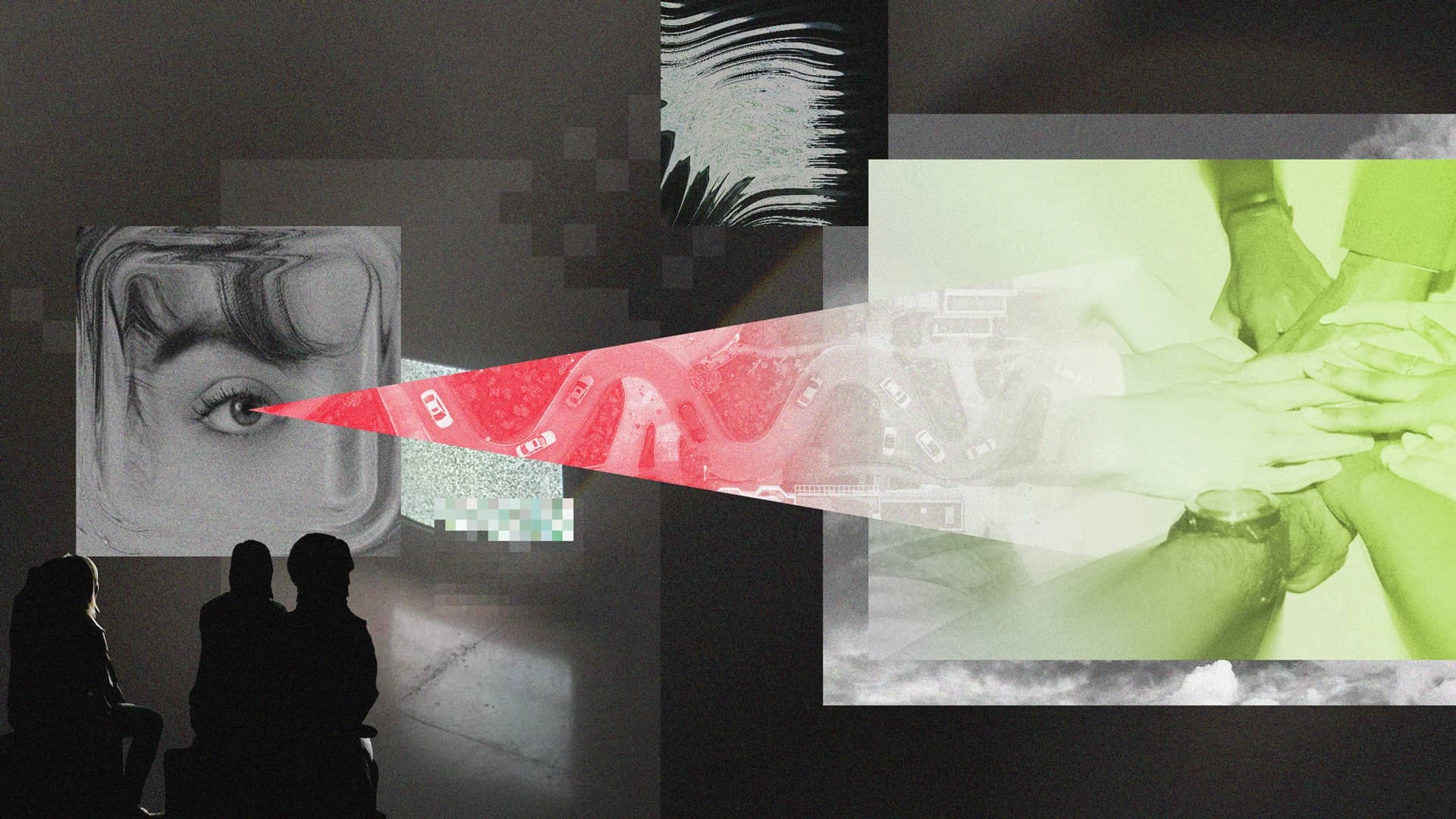

It’s OK to See Red: The Hidden Value of Negative Experiment Results

As part of Eppo’s new Humans of Experimentation conversation series, we sat down with Ricky Saporta, the Senior Vice President of Data at Entera.AI and publisher of the Data Product Management newsletter on Substack. In past roles, Ricky has led and built data teams at The Farmer’s Dog, The Orchard, and VYDIA.

In this conversation, Ricky explains:

I have been fortunate enough to lead and build data teams multiple times in my life. Currently, I am at Entera.AI, a trailblazing and innovative company sitting at the intersection of PropertyTech and FinTech.

Prior to that I helped build the Data Strategy and Insights team at The Farmer’s Dog, which offers fresh food and treats for dogs delivered directly to your home.

Before making dog food, I spent many years working in data in the music industry. I built and led the data teams at The Orchard, which was acquired by Sony, and VYDIA, which was recently acquired by Larry Jackson’s Gamma. Before that, I did a brief stint at Next Big Sound, which was later acquired by Pandora.

My entry into the data industry, though, was in sales and support of a BI tool named Phocas. This was in the days before the cloud, and this young UK-based company was entering the USA market. Many of the customers who were purchasing our product were midsize companies that had been around for decades; some were older than the digital computer. They had learned to run their business using pen and paper, and for most of them, their data was purely operational. They had never been able to do analysis with it before then. The primary users of our software were business executives, and my job was to sit alongside them and teach them how to use our tool. They would ask me questions about their business, and I would build out the analysis on the fly, sitting right next to them, which of course led to follow-up questions and discussions; this was the first time they were seeing their data like this! I was teaching these seasoned execs how to do deep-dive analyses and showing them all the questions that they could now answer, and meanwhile, I was getting a crash course in how each of our customers ran their business and what their most important questions were! It was a hell of a way to jump into the deep end of the data industry.

In the years since, I’ve done everything from data science to data engineering, business intelligence, and of course building data products that go directly into the hands of customers. My driving passion though has always been decision-making — not only how humans decide, but how they understand their own decision-making process. This is also why I love data product management so much. It’s all about how we enable decisive actions.

The mistake is blindly following data, or worse, relying on the data to tell you where to go. Data cannot answer this. You need to know where you’re going. Data can tell you how to best get there. Data can point to paths you didn’t know existed, and it can give you the confidence to act, to go. But it cannot tell you where your destination should be.

I’ve seen data misapplied in both extremes. You have the folks who see a report and say, “the data is clearly telling us to do X, so let’s go! We’re doing X!”

At the other extreme, you have the folks who have already made up their mind about what they’re doing before even seeing the data. These folks will either point to a shortcoming in the data and use that as a reason to ignore it all, or — and this is way worse — structure the analysis in a way that supports the decision they already made.

Counter-intuitively, both of these situations stem from the same human bias, and are both alleviated in the same way: with the decision maker becoming more aware of how the data works, what can be done with it, and how to use data effectively to inform decisions.

I’ve come to prefer the term “data-informed” instead of “data-driven,” because the subtlety of “inform” vs “drive” has an effect on the data culture of the organization. This is not about semantics. It’s about how we understand the role and purpose of data.

We have to be thoughtful and deliberate in how we apply data.

In my opinion, data has one, and only one, purpose, which is to enable better decisions about what to do. In other words, to improve decision-making.

Folks will say that data is about outcomes. And sure, that’s true. But what group in an organization is not about outcomes? Manufacturing is about outcomes. Customer service is about outcomes. Education is about outcomes. Raising kids is about outcomes.

Saying “Data is about outcomes” is what I would call TBU, true-but-useless. We need to ask “How exactly does data produce outcomes?”

Data is about decisions, it’s about what to do. And, specifically, it is about unblocking those decisions so that we can act.

If we are using it properly, data unblocks a decision which has an action, the action produces an outcome, and — assuming our work was correct — the outcome brings us closer to our larger goal.

When we talk about improving decision-making, we can improve the decision itself or the making of the decision. Improving the ‘making of the decision’ looks like less deliberating, less walking around in circles, and instead a resolve to act. Often, the biggest reason for procrastinating is a lack of information. How many times have you seen a meeting end with “let’s set a follow-up meeting”? For what? Why? What is going to be different in that second meeting? Will it end in needing a third meeting?

We need to look at this first meeting and ask “What decision were we hoping to make, and why were we not able to make it?” Then, if you follow this thread, and keep asking why, you will undoubtedly find that there is a big ol’ unknown sitting beneath all of the swirly, back-and-forth discussion.

If instead, you’re able to identify that unknown, gather the data and insights that are needed to resolve the unknown, and then have the meeting, you will find that your meeting ends rapidly and it ends with a clear plan of action. Keep this up, and pretty soon you don’t even need to have meetings anymore!

If you want to know if a company is using data effectively, look at their meetings. A tell-tale sign of teams using data effectively is that they end meetings with a resolve to act and extreme clarity on what they have to do.

So this is one way that data improves decision-making. It improves the process, and reduces the theoretical KPI, “time to decision.” In other words, we get to the right decision faster.

The other way data improves decision-making is that we improve the decision itself. We can think of “deciding” as selecting between any number of possible options. Improving the decision means selecting a better option than we otherwise would have.

Let’s say there are five different options, and our gut says to choose Option C. Then, the data-analysis suggests that Option D is better because of such and such a reason and leads to better outcomes. It’s not necessarily that Option C is a bad choice, but rather that there is a better choice available, and the data helps us to understand that and act.

We need goals, and we need a value-metric.

Implied in all of this is that we (and by “we,” I mean the stakeholders plus the data team) have two things clearly identified: a clear goal and a way to measure progress towards it. The latter can often come from the data team, either directly or via collaboration. The goal, on the other hand, should come from the team leadership, although really great data practitioners will be helpful thought partners.

I personally like the metaphor of a mountain summit as a goal. The various paths to the summit are the decisions we make along the way. If there is only one possible path that leads to the summit, then there is no decision to make, and no need for data. Just go ahead and get moving. But that is rarely the case. If you’re summiting a mountain, you are going to encounter forks in the road where you will need to decide which way to go; you will encounter rivers and crevasses that you need to navigate. The data team’s job is to help guide the larger team and the stakeholders. Which direction should we go? What’s the best way around this obstacle?

The moment you have more than one option available, you need to figure out how to choose between those options. That’s where data comes in. Not just data, but the thoughtful application of it.

So we need to have some sort of goal. Then we need to figure out what paths will take us there, and how to decide between them.

If we have several options, we need a way to compare those options. The question is not just which one brings us closer to our goal right now, but which one does so in the big-picture sense. Meaning, we’re not looking only at the short-sighted output, but also considering the tradeoffs we will have to make in the future, and asking, “Overall, which option has the highest degree of total success?”

We want to avoid oversimplification, though. Every decision comes with tradeoffs, and we need to fully understand those tradeoffs. So if we're increasing some metric, what else is going on in that ecosystem?

We need to be able to understand the entirety of that path, and not just one single metric.

Let’s focus again on the decisions. We have a goal we want to get to, we have a way to measure our success. How do we choose between different actions we can take?

We need a way to know what is going to happen as a result of our actions. What we are asking for here is literally to be able to tell the future. From one point of view, this is an impossible task. On the other hand, we can observe the repeated nature of things and make useful inferences about the future. In other words, we can use our data skills to make effective predictions of the future.

There are two important caveats here: We of course need the right skillset, and we need the right data.

This is actually why I love what your team is doing at Eppo. Experimentation is critical, yes of course. But Eppo is doing something novel and unique in your approach to what I would call “the thinking problem.” You are going deeper in that you’re helping data stakeholders form more effective mental models, and all the while, you are guiding them in how to think about the interpretation of the data. You’re upskilling the team in their data literacy, and you’re doing it ever so subtly. When Che Sharma showed me the product I was quite blown away.

But back to your question: how does the data team actually help bring about impactful outcomes? By guiding stakeholders and decision-makers as to which decisions are best, which decisions will lead to the outcomes we most want to live with. The recipe here is that the group has to collectively form a model of what happens in their corner of the world when they take certain actions. These can range from larger questions, such as “What if we lowered our prices 10%?” or “What if we offered free overnight delivery?”, to small minutiae, such as “What if we added a hint of turquoise to our logo?”

So let’s start with the data.

We either already possess the data we need, or we don’t. If we don’t have it, we can get it in one of three ways:

If we plan to gather the data through experiments, some level of data expertise is remarkably helpful. A great data partner will help structure the experiment so that when you get to the other side of the experiment, the results are applicable to the decision at hand, and they will also help think through the analysis before we do it.

And so here is an example of the right skillset. I call this skill Thinking in Data. It’s the ability to see how the real world shows up in recorded data and how the data represents the real world, which then enables you to understand what decisions the data can — and cannot — help you with. Notice though that this skillset is not unique to data practitioners.

The democratization of data does not just mean access to dashboards and abstracted metric layers. Democratizing data, to me, is about enabling every person in the org or every customer to make better decisions and gain a better understanding of the world. Once again, here is where I think Eppo is really getting it right. Enabling anyone in the org to design and manage an experiment is huge. The best tools don’t just do, but teach. The way Eppo guides you is fantastic. Not just in the analysis, but in the design. Any time a tool helps elevate the skillset of the org, I get psyched.

In my view, every single stakeholder and decision maker in the organization should be able to set up their own experiments. Any organization that is breaking any new ground needs to always be experimenting. By definition, we don’t know what is going to happen, and so we experiment to help fill those gaps. The more that the organization is regularly experimenting, the smarter and more capable the entirety of the org becomes.

And if everyone is experimenting regularly, that frees up the data team for where they are most useful: the big deep dives and robust explorations. And when needed, the data team can verify experiment designs; although in my experience, as the team becomes accustomed to experiments being a constant, regular part of their workflow, the verification process itself becomes more democratized and no longer has to go to just the data team.

Even in this verification process, the goal shouldn’t be to have zero errors. The goal should be that the mistakes are low-risk. The faster we can iterate and try again, the smaller our risks become. Why is that?

Risks in experimentation come from two sources: the treatments and the design.

Generally, the biggest risk is that one of the treatments ends up having an adverse effect. Here, we always want to sincerely ask, “Who or what else can be impacted, perhaps inadvertently? What is the worst possible thing that can happen? What is not the worst case but would also be bad? How does this align with our ethics and values?” This is not at all about the experiment or the experiment design, but about the treatment which has been conjured up.

But experimenting emboldens us to take risks and try new things — as it should! That’s the whole point. There is risk in the real world, and by experimenting, we are trying to put a box around the risk and keep it contained and limited. But experimenting does not magically dissolve the risk, and we have to always be mindful when deciding to test a treatment option.

The other major risk in an experiment is that the data we collect ends up being useless. In other words, all the time and effort that we’ve spent setting up the experiment, plus all of the alternate treatments and the costs of those treatments, have all gone to waste. I want to be extremely clear here: this is not about the numbers being inconclusive or showing us negative outcomes. While that might not be the outcome we hoped for, it is still valuable information.
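To make the point about negative outcomes concrete, here is a minimal sketch in Python of reading a negative result. It assumes a simple conversion-rate metric, a normal-approximation (Wald) interval for the difference between two proportions, and entirely hypothetical counts. The function names `lift_confidence_interval` and `interpret` are illustrative only, not part of Eppo or any other tool.

```python
from statistics import NormalDist


def lift_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Difference in conversion rate (B minus A) with a normal-approximation (Wald) interval."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a
    # Standard error of the difference between two independent proportions
    se = (p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b) ** 0.5
    # Two-sided critical value, e.g. about 1.96 for 95% confidence
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return diff, diff - z * se, diff + z * se


def interpret(low, high):
    """Map the interval to a plain-language reading; each outcome is usable information."""
    if high < 0:
        return "treatment is worse than control"
    if low > 0:
        return "treatment is better"
    return "no detectable difference at this sample size"


# Hypothetical numbers: control converts 1,200 of 20,000; treatment converts 1,050 of 20,000.
diff, low, high = lift_confidence_interval(1200, 20000, 1050, 20000)
print(f"lift = {diff:+.4f}, 95% CI [{low:+.4f}, {high:+.4f}] -> {interpret(low, high)}")
```

With these made-up numbers the whole interval sits below zero, so the treatment measurably hurt conversion: a clear answer we can act on. Even a result whose interval straddles zero is informative when the interval is narrow, because it rules out large effects in either direction.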

As a side note, I’m a strong advocate of scientists publishing negative results, and we should embrace the results that were not in our favor, whether we’re talking about general scientific research or within our orgs.

The risk I am talking about is where the experiment is not designed properly, and ultimately you can’t use that data to guide your decisions. Or even worse, you do use the data when you shouldn't! We have to structure the experiment so that when you get to the other side of the experiment, the results are applicable to the decision at hand. The most important question to ask when reviewing an experiment design is, “Will this data inform the decisions that we think it will?”
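One concrete way to ask that question before launch is a sample-size check: is the planned traffic large enough to detect the smallest change that would actually alter the decision? The sketch below is a hypothetical illustration, assuming a conversion-rate metric, the standard normal-approximation formula for a two-sided two-proportion z-test, and made-up baseline and traffic figures. The helper names `sample_size_per_arm` and `design_can_inform_decision` are ours, not any tool’s API.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline, mde, alpha=0.05, power=0.8):
    """Approximate users needed per arm for a two-sided two-proportion z-test.

    baseline: control conversion rate (e.g. 0.06)
    mde: smallest absolute change that would change the decision (e.g. 0.005); must be nonzero
    """
    p1 = baseline
    p2 = baseline + mde
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # guards against false positives
    z_beta = NormalDist().inv_cdf(power)  # guards against missing a real effect
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / mde ** 2)


def design_can_inform_decision(baseline, mde, users_per_arm_available):
    """True if planned traffic can detect the effect size that matters for the decision."""
    return users_per_arm_available >= sample_size_per_arm(baseline, mde)


# Hypothetical: 6% baseline conversion, 20,000 users available per arm.
for mde in (0.01, 0.005):
    needed = sample_size_per_arm(0.06, mde)
    ok = design_can_inform_decision(0.06, mde, 20_000)
    print(f"MDE {mde:.3f}: need {needed} per arm -> {'enough' if ok else 'not enough'} traffic")
```

Under these assumptions a one-point lift needs roughly 9,500 users per arm, while a half-point lift needs roughly 37,000, so 20,000 users per arm can inform the first decision but not the second. If only the smaller change would matter, the design needs more traffic, a longer run, or a more sensitive metric before it is worth running.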

If you interview with me for a role as a Data Product Manager, you’re going to be asked a lot about experimentation. In order to be an excellent Data PM, you have to have really great PM skills, experimentation being one of them. But then, within the realm of data product management, experience with experiments becomes much more crucial since so much of what we do as Data PMs is help others apply data effectively.

So, there are two questions I always ask when interviewing someone for a Data PM role:

Part two of this conversation, which focuses on the links between generative AI and experimentation, will follow later this month.