

One of the tragedies of in-house tooling is that it rarely gets design resources. It's difficult enough to get any resources at all for internal tooling, and while companies do hire data tools engineers and data platform PMs, it's exceedingly rare for a company to have designers dedicated to internal tools.

This especially affects the experimentation landscape. An unfortunate market failure in commercial experimentation tooling has led most companies to build in-house rather than buy. But most of these in-house tools aren't creating the organizational buy-in that data teams want. I still constantly hear data workers say "I wish more teams and product launches would use A/B experimentation" or "I wish there was more of a culture of experimentation at my company."

The problem is that culture change requires scaling the workflow far beyond the headcount of your experimentation tools team, which means building interfaces designed to reach beyond the data team.

The whole point of an experimentation process is to make better product decisions. But most experimentation interfaces don't make good decisions easy. They typically spew out every related number in a big grid and lean on the reader's metrics and statistical training to sort it out, which in practice means pulling an analyst in for every decision.

My take on how to fix this comes from formative career experiences at Airbnb and Webflow, two companies that are famous for their design culture. Of the design tenets that I saw at Airbnb, the one I feel is most grossly underrepresented in data tools design is visual hierarchy.

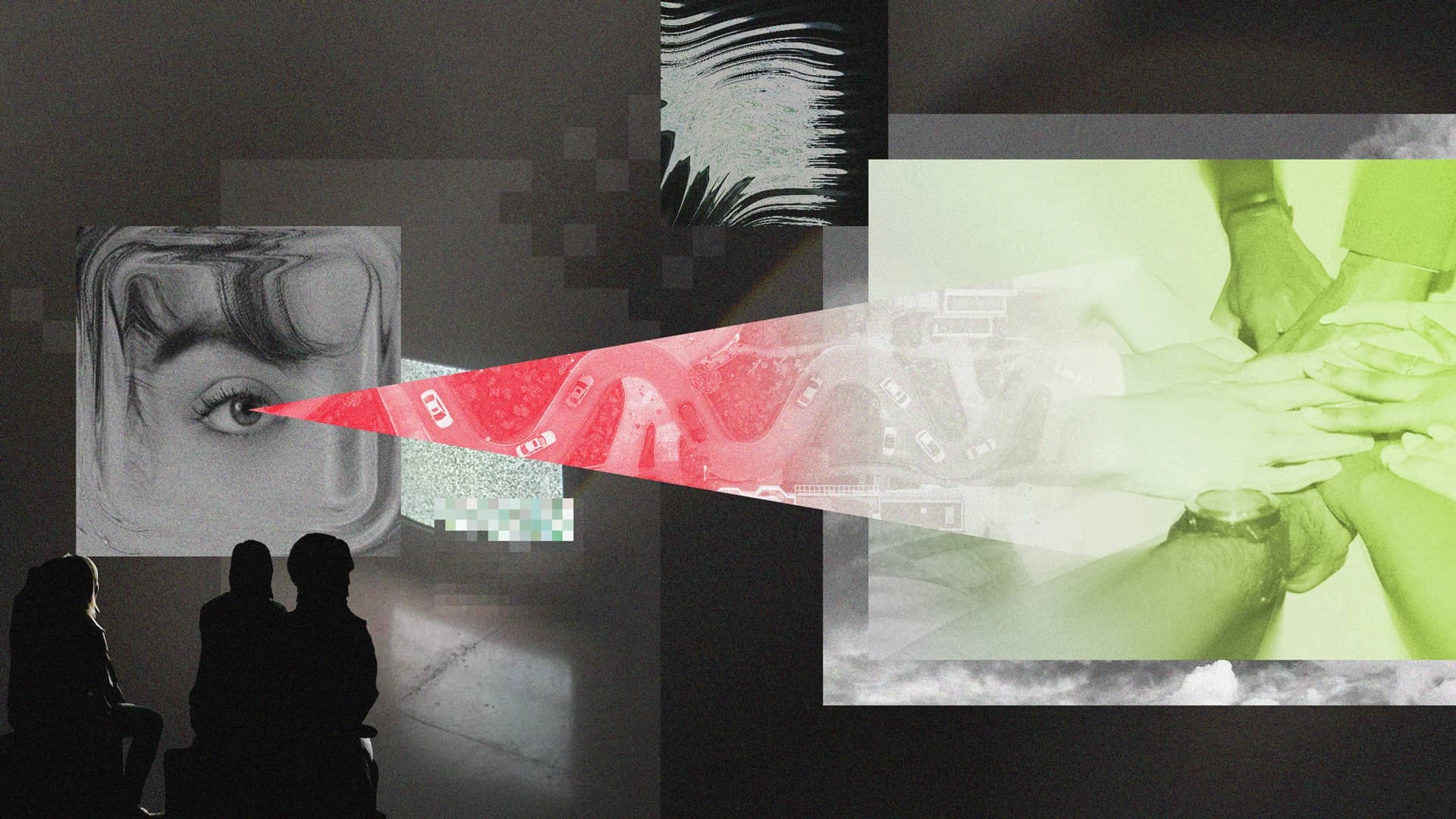

Trained designers probably have a better way of explaining visual hierarchy, but my amateur process is to guide the reader's eyes. When we design interfaces at Eppo, I usually want to know: "Where should their eyes hit first? What is the first step of absorbing this content?" The first object to fall into a reader's gaze is almost always:

I know you didn't read this blog post to learn how eyes work, but just try this framework with your internal tools. When someone opens one up, does the visual hierarchy match the jobs-to-be-done ordering of the content? If a layperson opened the interface, would they naturally be drawn to the most important places, and arrive there with context? Is the reader drawn to the interactions that will add value and help them converge on a good decision?

In my experience, the answer is definitively no. More likely, the reader will see a huge table of metrics (i.e., more than 100 rows), often buried between code blocks of a Jupyter notebook. If there's a dashboard, some of those metrics might flash green or red. Either way, the PM ends up calling in an expert (a data worker) to actually make the decision, and the team reverts to the hand-holding process.

I can empathize with why most tooling fails this test. The interfaces are being pulled in multiple directions. Data workers want access to the raw statistical guts, while PMs want clear-cut decisions and widely presentable results. The reports need to be both clean enough to read and interactive enough for slice-and-dice investigations. It's a tough domain for establishing visual hierarchy, and even experienced UX designers can find these interfaces tricky.

However, the most common scenario is simply no interface at all.

In our user research conversations, I am continually struck by how many data teams don't have self-serve experiment reports. A ton of teams have data workers running their own process and then copy/pasting the results into a Google Doc. These same data workers then wonder why more teams don't run experiments.

The problem is that this process doesn't empower product teams to champion experimentation. They have to put an incredible amount of trust in the data worker's ad hoc workflow. Even if they do, they then report the results to a product leader who has to extend the same trust from multiple degrees away. And when the inevitable follow-up investigations arise, teams have to trust that a data worker will respond quickly and correctly.

Contrast that with an auto-generated report that uses vetted code and established methodologies, and that product teams can check at any time, share across the org, and use to self-serve all follow-up investigations. Suddenly the team can own experiment execution, find their own learnings, and dazzle other teams when they get wins.

Again, I know you didn't read this blog post to learn that dashboards are helpful. But experimentation is a high-trust, high-consequence problem domain. It requires broad organizational buy-in: cross-functional partners need the confidence to declare winners and losers, and everyone needs to know not just "what" but also "why". For this, well-designed, self-serve interfaces are a necessity.

We consider design to be core to everything we do at Eppo. We want to build interfaces that lower the cognitive load of running and understanding experiments, and that take seriously the need for non-technical users to make decisions confidently. Experimentation is cross-functional, requiring PMs, engineers, and designers to all understand the process and ultimately become champions of experiment culture.

Airbnb showed the world how thoughtful, human design can build trust in a previously unthinkable business model. At Eppo, we want to build trust in a methodology that can feel inaccessible to most of the teams using it. It's time for experimentation to get a major design facelift.