
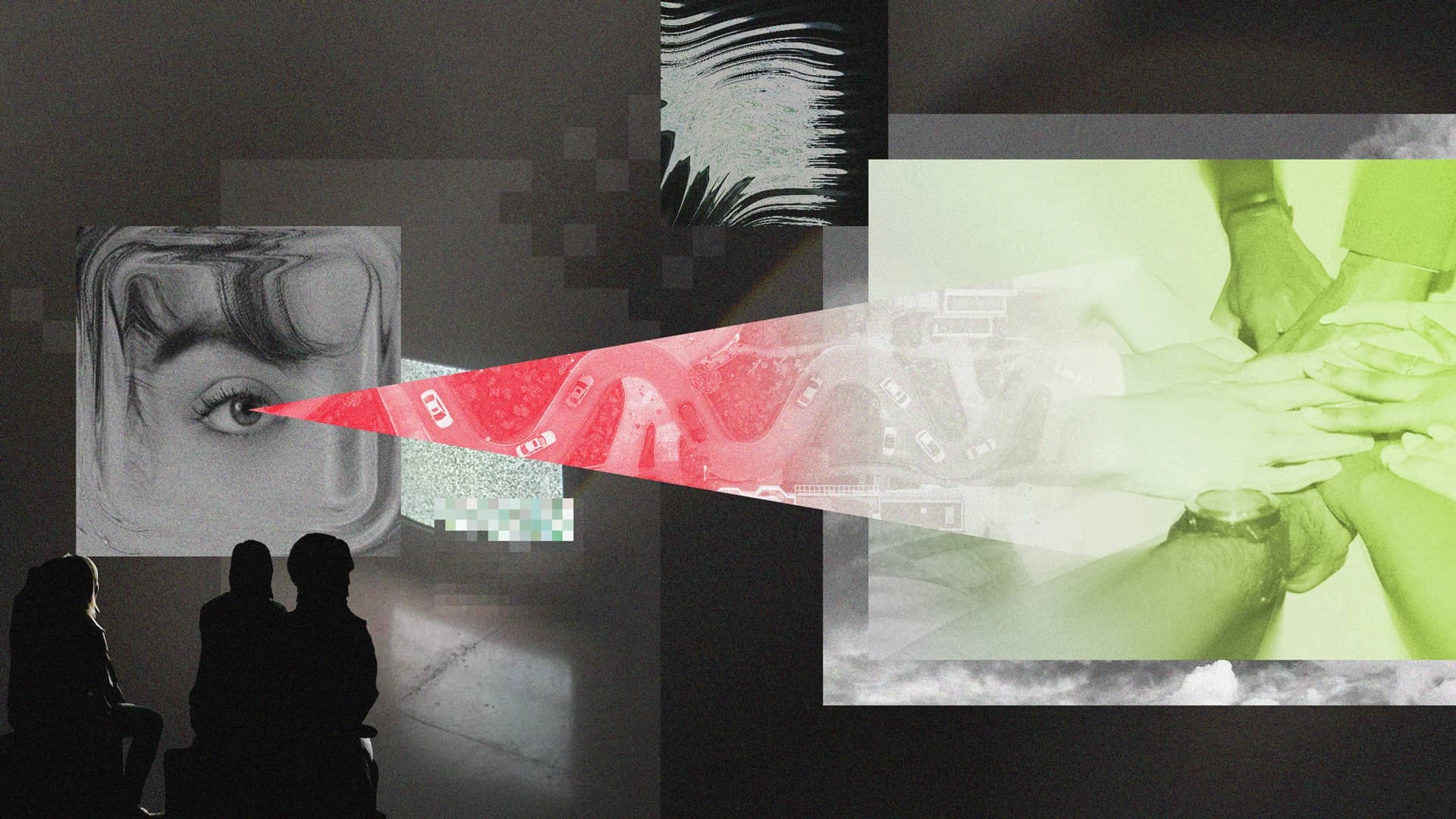


My formative data science background was at Airbnb. I joined in 2012 as the fourth data scientist and left in 2017. Over that time, we collectively produced Airflow, our Experimentation Platform (ERF), and The Knowledge Repo. Most importantly, we had easily detectable impact that lifted the brand of the team internally and externally.

The most important piece of that journey was our experimentation platform, ERF. And it's not just me saying how important experimentation was: you can read this feature on Riley Newman, the original head of the data team, to see a similar takeaway. Experimentation plugged the data team's work into the ethos of the company. Suddenly, metrics mattered.

And it's not just Airbnb. For every data team that has demonstrated clear ROI, look at the resources spent on experimentation. You can read reams of articles about the experimentation practices at Airbnb, Netflix, Spotify, Facebook, and Uber, or even at earlier-stage companies like TubiTV and GetYourGuide. Whether in infra development, compute expense, or hours spent on procedural analytics tasks, these companies invest heavily in pervasive experimentation.

The emergence of the modern data stack has led to an explosion in the number of data teams. These teams easily spin up reporting capabilities, but their contributions are often limited to data pulls for board meetings and post-hoc rationalization for product teams. It's easy to serve numbers to teams, but it's hard to find situations where those numbers change anyone's thinking.

How can data teams more meaningfully affect the org, and deliver ROI that clearly justifies the presence of the team? The key is experiments.

To start, every data team should remember why it exists: improved decision making. Most data worker headcount is dedicated to analytics work. Analytics doesn't produce dollars via products that you sell. The dollars come transitively, from better decisions that lead to products that sell. Across every data team, the ultimate goal is to define good decisions in the voice of the customer, i.e., via metrics.

The problem is that metrics reporting doesn't itself lead to good decisions. The CEO might be happy knowing how business KPIs moved, but these KPIs don't do much for product teams. Given a revenue dashboard, a product team's only response is to pray that revenue goes up. There's no connection from revenue to product. It's not enough to know that there was an overall 20% YoY increase in revenue; you need to know specifically which product decisions increased revenue.

Data teams need a decision layer. Just as data teams establish a ground truth of metrics, they need to establish a ground truth of decision quality. Good decisions incontrovertibly improve the customer experience. They are immortalized in tactics to emulate, teams that get resourced, and people who get promoted. When people tell the story of a company's growth, it's usually in the form of good decisions that were made, and any analysis that doesn't lead to a decision is ultimately forgotten.

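Generic metric dashboards rarely show which decisions were good or bad, for two reasons: they can't isolate a product change from everything else that moves a metric, and they can't separate the quality of the product thinking from the quality of the engineering.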

The experimentation practices at Airbnb, Spotify, and Netflix simultaneously solve both problems. They seamlessly isolate a product change from every other possible effect, while providing analytical capabilities to separate the quality of product thinking from the quality of engineering. And they deliver these capabilities publicly and widely, aligning the entire organization on common interfaces and centralized practices.
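To make "isolating a product change" a little more concrete, here is a minimal sketch in Python. It is not ERF or any company's actual platform: the function names, the simulated revenue numbers, the seasonal boost, and the normal-approximation confidence interval are all assumptions chosen for illustration. The idea is that random assignment exposes both groups to the same outside forces, so the difference between them can be attributed to the change itself.

```python
import math
import random
from statistics import NormalDist, mean, variance


def lift_with_interval(control, treatment, confidence=0.95):
    """Return (difference in means, lower bound, upper bound).

    Uses a normal approximation, which is an assumption that is only
    reasonable for large samples.
    """
    diff = mean(treatment) - mean(control)
    # Standard error of the difference between two independent sample means.
    se = math.sqrt(
        variance(control) / len(control) + variance(treatment) / len(treatment)
    )
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff, diff - z * se, diff + z * se


def simulate(n_users=20_000, true_effect=0.5, seasonal_boost=2.0, seed=7):
    """Simulate hypothetical per-user revenue for a randomized experiment."""
    rng = random.Random(seed)
    control, treatment = [], []
    for _ in range(n_users):
        # The seasonal boost hits every user, whichever group they land in.
        revenue = rng.gauss(10.0, 3.0) + seasonal_boost
        if rng.random() < 0.5:  # coin-flip assignment to treatment
            treatment.append(revenue + true_effect)
        else:
            control.append(revenue)
    return control, treatment


control, treatment = simulate()
diff, low, high = lift_with_interval(control, treatment)
print(f"estimated effect: {diff:.2f} (95% CI {low:.2f} to {high:.2f})")
```

A dashboard would show revenue up by the seasonal boost plus the product effect, with no way to tell them apart. Because the boost lands equally on both groups here, it cancels out of the comparison and only the simulated product effect remains in the estimate.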

In effect, the tooling at these companies doesn't democratize data computations; it democratizes good decision making.

At Eppo, we believe that experimentation is a skeleton key to unlocking a culture of metrics. Companies start data teams out of an aspiration to be more quantitative and scientific. But hiring data scientists won't itself lead to a culture of empirical thinking. To have impact, data scientists need an experimentation platform to inject metrics into the product development process.