
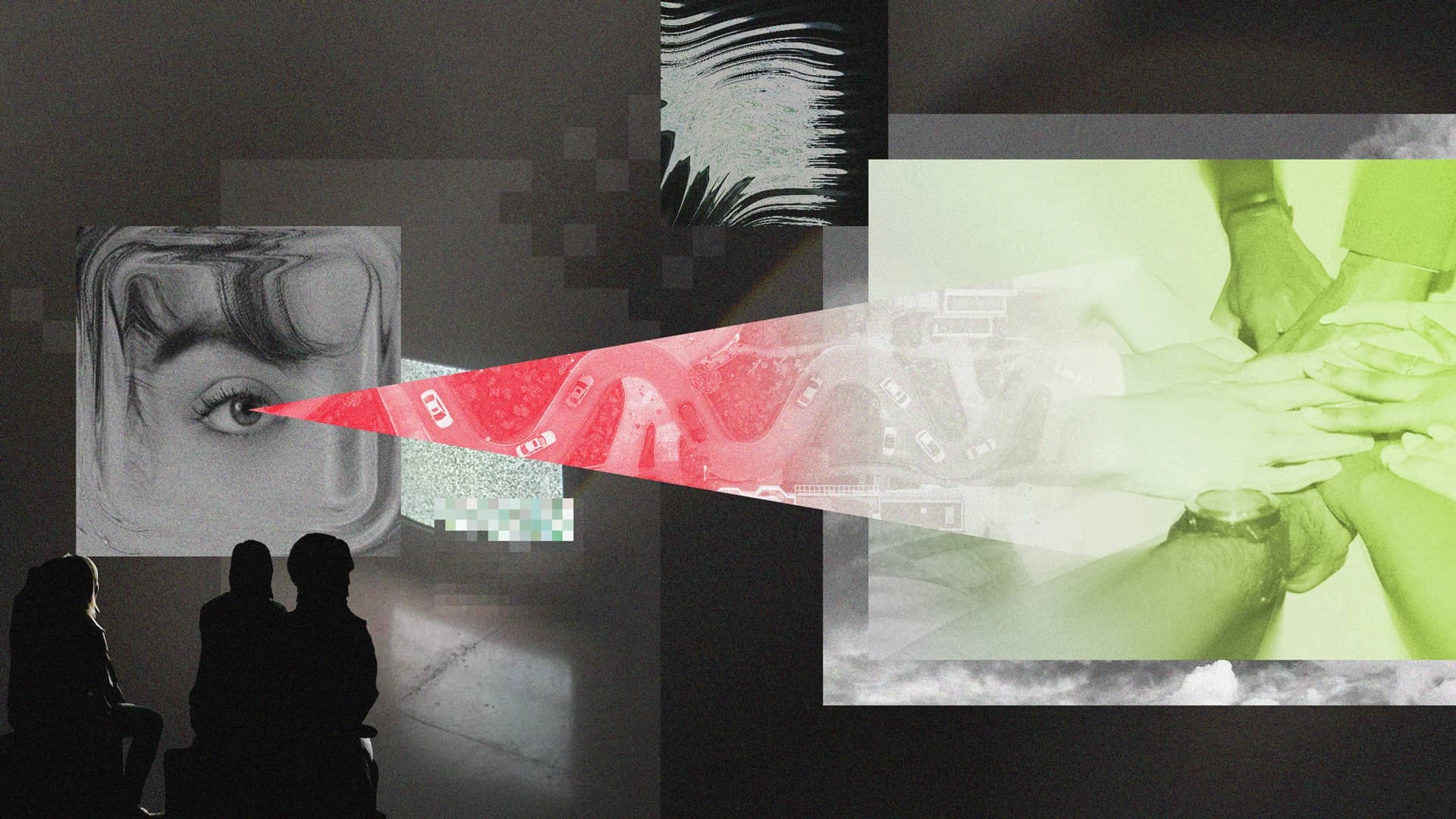

It’s OK to See Red: The Hidden Value of Negative Experiment Results


To us Data Folks, the value of experiments seems incredibly clear. Experiments allow us to establish causal relationships between a product change and a metric with statistical rigor.

But one of the most valuable lessons I’ve learned as a Data Scientist is that other folks aren’t as quick to understand and appreciate the value of a well-oiled experimentation culture. (Some people just don’t have the same appreciation for the Central Limit Theorem as you do, can you believe it?!) And until you can convince others of its value and build a culture around it, you’ll be left building dashboards that never affect a decision.

As a Data Worker attempting to build an experimentation culture, the task at hand can seem daunting. But in my time building experimentation cultures at companies like Intercom, Loom, and ChowNow, I’ve developed a few tips that have gone a long way in rallying folks around a data-driven experimentation culture.

Tip #1: Assume Nothing, Educate All

It is essential to meet your stakeholders where they’re at in their experimentation journey. Most of the time, it's worth starting any education from zero. There are likely some misunderstandings or assumptions that need to be cleared before getting to higher-level topics.

This is why I always recommend holding educational sessions with any and all stakeholders of the experimentation process. In these sessions, you should provide a high-level overview of the essential concepts.

These concepts should all be connected to practical examples within a business context. Clear connections between the math and real business value must be made.

Additionally, you should help folks understand what previously unanswerable questions can now be answered with experimentation. Folks don’t need to leave this session being able to recite the definition of a p-value, but they should understand that experiments allow them to quantify their certainty in a hypothesis and understand the tools to do so.

Tip #2: Embed Experimentation into the Planning Process

Once you’ve convinced your stakeholders that experimentation is valuable and educated them on the core principles, work towards embedding experimentation into their planning processes. Because experimentation is new, it’ll likely be an afterthought initially. Don’t take this personally. We are all creatures of habit.

They’ll go about their sprint-planning exercises without you, remember that session you held on the value of experimentation, and then ask you if you can run one of those “experiment things” a few hours before they launch their new feature. You’ll be left scrambling to ensure that feature flags have been defined properly, a reasonable effect size can be detected within an acceptable amount of time, and that all events you’ll need for analyzing the results of this experiment are instrumented. This will naturally lead to execution issues but will help to build the experimentation muscle.

Over time, you should become embedded in your stakeholder’s sprint-planning process. Once embedded, you’ll work directly with your PM early in the planning process to understand what they want to test, how they want to test it, and what is required to test it so that resources can be devoted to implementing this as a part of that sprint. This will allow you to arrive at launch day fully confident that everything is in the right place.

In my previous roles, I’ve facilitated these planning discussions with an ‘Experiment Planning Template.’ This contained questions that would nudge PMs to think about all the important questions that needed to be answered upfront to avoid execution issues. Although this will be difficult for them initially, it’ll become second nature after a few experiments.
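One of the upfront questions such a template might ask is whether a reasonable effect size can be detected within an acceptable amount of time. A minimal sketch of that check is shown here, assuming a conversion-rate metric, a two-sided two-proportion z-test, and an even traffic split. The function names, the 10% baseline, and the 2,000 daily users are illustrative assumptions, not values from any real experiment.

```python
import math
from statistics import NormalDist


def users_per_arm(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate users needed per arm to detect a relative lift in a rate."""
    treated_rate = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)
    variance_sum = (baseline_rate * (1 - baseline_rate)
                    + treated_rate * (1 - treated_rate))
    delta = treated_rate - baseline_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance_sum / delta ** 2)


def days_to_run(n_per_arm, daily_users, arms=2):
    """Days needed to fill every arm, given total eligible users per day."""
    return math.ceil(n_per_arm * arms / daily_users)


# Hypothetical inputs: 10% baseline conversion, 10% relative lift.
n = users_per_arm(baseline_rate=0.10, relative_lift=0.10)
print(f"users per arm: {n}, days at 2,000 users/day: {days_to_run(n, 2000)}")
```

Running this during sprint planning, rather than a few hours before launch, makes it clear early whether the test is feasible or whether the target effect size or metric needs to change.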

Tip #3: Make Experimentation a Part of Every Decision

Once you’ve established your place in the planning process, you’ll want to ensure that experiments are also included in the decision-making process. Although this sounds obvious to us, it isn’t always obvious to others. Initially, stakeholders might simply see experiment results as an addition to the appendix of their PowerPoint slides announcing the release of a new feature.

The expectation should be set that experiment results will always be considered when a shipping decision is being made. In my previous roles, I facilitated this by drafting an experiment report that’d be presented to all stakeholders involved in the decision-making process. By taking the burden of interpretation onto yourself, your PM will be more likely to begin using experimentation as an input into their decision-making.

In these reports, I’d provide both a high-level executive summary as well as a detailed summary of results. This allowed decision-makers with little time to quickly grok what they needed to know to make a decision, while also giving them the freedom to dig into the results more deeply if so desired. Additionally, it simply signaled that I’d done my due diligence on analyzing this experiment and wasn’t taking aggregate results at face value. This subtly builds trust with those stakeholders across time.

Tip #4: Begin with the End in Mind

As you begin to run more and more experiments, it is important to step back and ensure that these experiments have a clear connection to business goals and objectives. This means that all hypotheses and core metrics should clearly connect to business goals. If there is a company goal to increase daily active users, you should ensure that metrics related to daily active users are the focus of your experiment. If there is a company goal to increase visitor-to-trial conversion, all experiments should have visitor-to-trial conversion at their center.

By connecting your experiments to key business objectives, you’ll create an intrinsic interest from folks beyond those within your immediate sphere of influence. This is because clear, demonstrable impact leads to promotions. With these new experiments, they’ll be able to take those results to executives and demonstrate clear ROI. This creates a feedback loop that will push folks to ask for more experiment results. Your boss, your stakeholder's boss, and your stakeholder's boss’s boss will all be interested in seeing those latest experiment results.

Tip #5: Minimize Time-to-Insight

Once you have the attention of both your immediate stakeholders and their bosses, it won’t be long before they inevitably start asking why it is taking so long to run these experiments. The business will always want to have those experiment results yesterday. And although the laws of statistics can’t really be bent to make that happen, there are a few things that can help decrease runtime and reach statistical significance earlier.

First, smart metric design can go a long way in reducing variance. Whenever possible, avoid using metrics that are sums and counts of random variables with long-tailed distributions. These random variables typically have extremely high levels of variance. Instead, craft derivatives of this metric that essentially measure the same thing but without all of the unnecessary noise in the tails of the distribution.

For example, when I was at Loom working with the Growth Team, we had a goal of getting more folks to both record and watch Looms within their first week on the platform. It was initially suggested that we should simply measure the average number of videos recorded and watched per user. However, these metrics fit the description of those with long tails that we should avoid wherever possible.

To combat this, I did some exploratory data analysis on the median number of videos watched and recorded within a user’s first week. Once I had identified these thresholds of X videos watched and Y videos recorded, I recommended we simply measure the share of users with at least X videos watched and Y videos recorded, in an effort to measure whether we were pushing that median higher. This metric naturally curbed the influence of power users, significantly reducing variance and thus time-to-insight, while still measuring what the business was hoping to measure.
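To make the variance argument concrete, here is a rough sketch on synthetic data. It assumes a single long-tailed count (videos watched) drawn from a lognormal distribution, which is an illustrative stand-in and not Loom's actual data, and compares the coefficient of variation (standard deviation divided by mean) of the per-user average against that of the thresholded share. For a given relative lift, the required sample size grows roughly with the square of that ratio.

```python
import random
import statistics


def coefficient_of_variation(values):
    """Standard deviation relative to the mean; lower means less noise."""
    return statistics.pstdev(values) / statistics.fmean(values)


# Synthetic, long-tailed "videos watched in first week" (assumed distribution).
rng = random.Random(42)
videos_watched = [int(rng.lognormvariate(1.0, 1.2)) for _ in range(10_000)]

# Threshold taken from the median, mirroring the approach described above.
threshold = statistics.median(videos_watched)
reached_threshold = [1 if v >= threshold else 0 for v in videos_watched]

cv_average = coefficient_of_variation(videos_watched)
cv_share = coefficient_of_variation(reached_threshold)

print(f"threshold (median): {threshold}")
print(f"CV of videos per user: {cv_average:.2f}")
print(f"CV of share at or above threshold: {cv_share:.2f}")
```

The two metrics will not necessarily move by the same relative amount under the same product change, so this comparison is a guide to relative noise, not a guarantee of shorter runtimes.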

However, there are still cases when you’re going to need to summarize metrics with long-tailed distributions. The most common examples are revenue-related metrics. You’ll never convince a CFO that ‘Average Revenue per User’ is a sub-optimal metric. In these cases, handling outliers can significantly reduce variance. Although there are a lot of opinions on the best methods for handling outliers, ultimately business context should drive your decision as to which one should be used. One common method is winsorization, which caps extreme values at a chosen percentile.
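As a minimal sketch of upper-tail winsorization, the function below caps every value above a chosen quantile at that quantile's value. The 95th-percentile cutoff, the nearest-rank quantile rule, and the revenue figures are all assumptions for illustration; the right cutoff depends on your business context.

```python
import math
import statistics


def winsorize_upper(values, upper_quantile=0.95):
    """Cap values above the given quantile at that quantile's value."""
    if not values:
        return []
    ordered = sorted(values)
    # Nearest-rank position of the quantile, clamped to a valid index.
    idx = max(0, min(len(ordered) - 1,
                     math.ceil(upper_quantile * len(ordered)) - 1))
    cap = ordered[idx]
    return [min(v, cap) for v in values]


# Hypothetical revenue per user: mostly small, with two very large accounts.
revenue = [20.0] * 90 + [50.0] * 8 + [5000.0, 20000.0]
capped = winsorize_upper(revenue, upper_quantile=0.95)

print(f"std before: {statistics.pstdev(revenue):.1f}")
print(f"std after:  {statistics.pstdev(capped):.1f}")
```

Note that winsorizing changes the metric's mean as well as its variance, so the same rule should be applied to every experiment arm.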

Advanced statistical frameworks and methodologies can also help reduce experiment runtimes. Statistical tools like CUPED combine historical user data with your experiment data to reduce variance in your metrics, thereby reducing time-to-insight. Here at Eppo, we’ve seen some customers reduce their experiment runtimes by as much as 50% when using CUPED.
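The core CUPED adjustment can be sketched in a few lines. This version assumes a single pre-experiment covariate per user (the same metric measured before the experiment) and synthetic data; it illustrates the basic idea only and is not a description of Eppo's implementation. In practice the coefficient is typically estimated on data pooled across all arms.

```python
import random
import statistics


def cuped_adjust(metric, pre_metric):
    """Remove the part of each value that is explained by pre-experiment data."""
    mean_pre = statistics.fmean(pre_metric)
    mean_metric = statistics.fmean(metric)
    covariance = sum((x - mean_pre) * (y - mean_metric)
                     for x, y in zip(pre_metric, metric))
    pre_variance = sum((x - mean_pre) ** 2 for x in pre_metric)
    theta = covariance / pre_variance  # regression slope of metric on covariate
    return [y - theta * (x - mean_pre) for x, y in zip(pre_metric, metric)]


# Synthetic users whose in-experiment metric correlates with past behavior.
rng = random.Random(7)
pre = [rng.gauss(100, 20) for _ in range(5_000)]
post = [0.8 * x + rng.gauss(0, 8) for x in pre]

adjusted = cuped_adjust(post, pre)
print(f"variance before: {statistics.pvariance(post):.1f}")
print(f"variance after:  {statistics.pvariance(adjusted):.1f}")
```

The adjustment leaves the metric's mean unchanged while shrinking its variance; how much it shrinks depends on how strongly the pre-experiment data correlates with the in-experiment metric.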

Beyond CUPED, a Sequential framework can be used on its own or combined with CUPED to further reduce time-to-insight. A Sequential framework essentially assumes you’re going to peek at results every day and adjusts your confidence intervals accordingly to ensure false-positive rates don’t increase. This means that whenever you see a statistically significant result in your experiment, you can call the test. This is in contrast to the traditional Fixed-Sample paradigm, which says you must wait until you’ve reached a predetermined sample size to call your experiment.
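To see why the adjustment matters, the simulation below runs A/A tests (no true effect) and checks the z-statistic after each of ten looks. It compares the usual fixed-sample threshold against a Bonferroni-corrected one. Bonferroni is used here only as a deliberately simple and conservative stand-in; it is not the sequential method used by Eppo or any particular tool, and the number of looks and simulations are arbitrary assumptions.

```python
import math
import random
from statistics import NormalDist


def false_positive_rate(z_threshold, looks=10, simulations=2000, seed=0):
    """Share of A/A tests declared significant at any look."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(simulations):
        cumulative = 0.0
        for look in range(1, looks + 1):
            # Each equal-sized batch adds a standardized N(0, 1) difference
            # under the null, so the running z-statistic is sum / sqrt(looks).
            cumulative += rng.gauss(0, 1)
            z = cumulative / math.sqrt(look)
            if abs(z) > z_threshold:
                false_positives += 1
                break  # the test would be "called" at this peek
    return false_positives / simulations


alpha, looks = 0.05, 10
naive_z = NormalDist().inv_cdf(1 - alpha / 2)                # fixed-sample threshold
corrected_z = NormalDist().inv_cdf(1 - alpha / (2 * looks))  # Bonferroni per look

print(f"peeking with fixed-sample threshold: {false_positive_rate(naive_z):.3f}")
print(f"peeking with corrected threshold:    {false_positive_rate(corrected_z):.3f}")
```

Purpose-built sequential methods widen the intervals less than Bonferroni does, which is what lets them keep false positives in check while still allowing a test to be called early.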